Deep-pocketed tech giants have dominated the first phase of artificial intelligence.

However, the next phase promises to be different. Decentralized AI is democratizing access to data and models. One startup is leading this change.

We explain what decentralized AI is and why it’s the future, and reveal one company building its foundation.

The Antidote to Monopoly

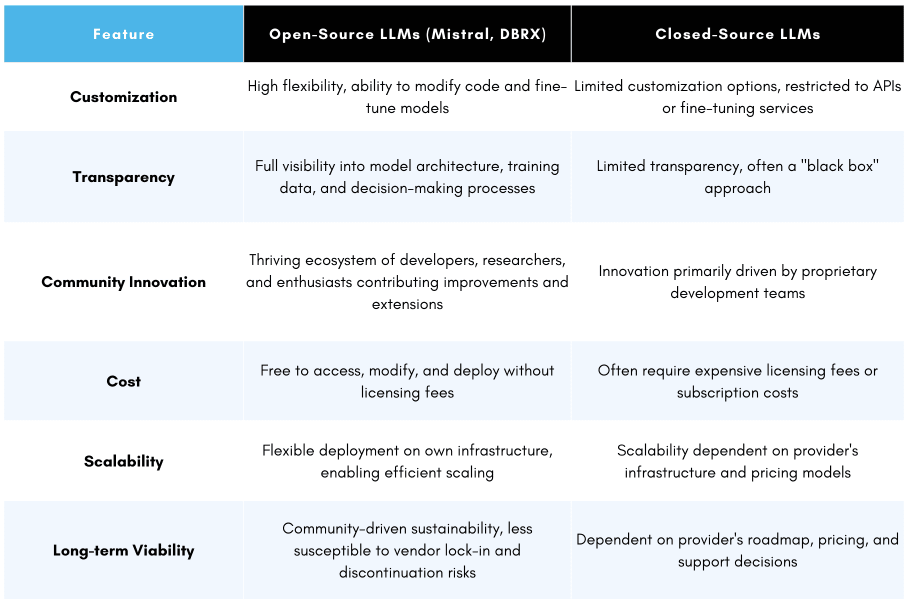

Open source versus closed source has been hotly debated since the beginning of the Internet.

When it comes to software, choosing between proprietary, restricted code and open, customizable code might seem trivial, but it can have far-reaching consequences beyond your own operations.

It’s an entirely different story with AI.

Keeping artificial intelligence closed-source on central servers could have several long-term consequences:

- Collective human intelligence becomes concentrated in the hands of a few

- Innovation and new solutions are suppressed

- The risk of bias and censorship rises

There’s a reason John D. Rockefeller’s Standard Oil empire came under antitrust scrutiny and was eventually broken up.

Google’s parent company, Alphabet, faces accusations of similar monopolistic practices today.

The big difference is that AI has even more far-reaching implications than oil or search.

Decentralized AI is the antidote to monopoly, and its adoption is on the rise.

What is Decentralized AI?

It’s 2030.

Everything is AI-native, meaning artificial intelligence is embedded into nearly every online service and app.

Because AI is not the core business of most companies, and building and hosting models from scratch is prohibitively expensive, licensing a model is seen as the only alternative.

But as the weeks and months pass, you come to realize that the new technology has just as many problems as the old technology.

For starters, the data privacy risk is even greater, as a centralized model is now being trained on your proprietary data.

A significant proportion of CIOs have cited privacy as one of their foremost concerns when adopting AI.

Second, delegating decision-making to a “black box” can lead to unfavorable results.

This is especially true when we don’t understand how an AI model reasons and produces its outputs. One paper on AI opacity has argued that even some large language model (LLM) makers do not fully understand why their models extract particular patterns from datasets.

Finally, centralized AI, by design, cannot be as scalable as its decentralized equivalent.

If you plan to grow, adding more employees (users) and expanding your use of the technology over time, this is a problem.

Decentralized AI is the opposite of this.

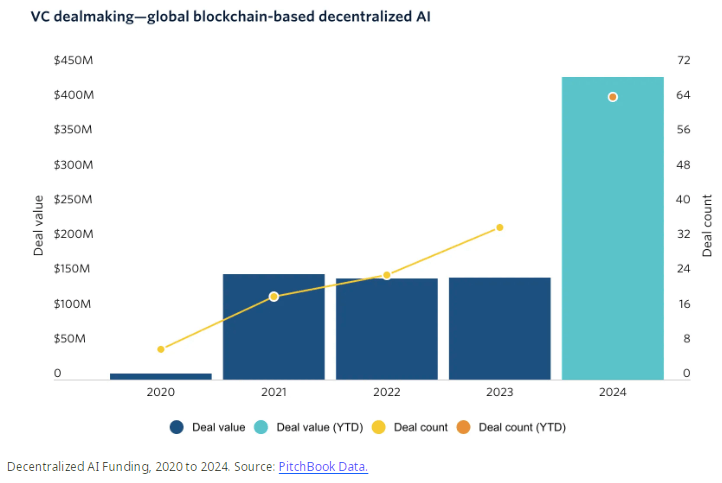

It’s a big reason why investment in the transformative technology increased by more than 200% in 2024.

Individual angels and firms are seeing exactly what we are: that distributed LLM ownership, community model training, and decentralized compute infrastructure are a better value proposition.

However, for all of its benefits, Decentralized AI (DeAI) has gained less traction than closed proprietary models.

One collective aims to change this by taking a developer-first approach while building an open ecosystem for everyone.

AI For Everyone

“Can machines think?”

Artificial intelligence research began in earnest when mathematician and computer scientist Alan Turing raised this fundamental question.

Now, more than seven decades later, the mission of “making AI open for everyone” has become a similarly defining question.

Individual developers, public benefit corporations like the Open Source Initiative (OSI), and startups have all aimed to make technology more accessible.

One company has taken such efforts to the next level.

In 2022, a small team of engineers, researchers, and product experts began working together to build fully open-source LLMs for local use.

This project turned into Nous Research, which has gone on to secure $70 million in funding, including a recent $50 million Series A round led by Paradigm.

Since its inception, Nous has released a series of open-source LLMs.

Large language models are what power chatbots like ChatGPT, which have become the primary way most people interact with AI.

The most advanced of Nous’ models is Hermes 3, a general-purpose LLM named after the Greek god Hermes. It stands apart from other open-source models for several notable reasons.

First, its largest version, Hermes 3 405B, was the first full-parameter fine-tune of Meta’s Llama 3.1 405B model.

Llama 3.1 was already a top model for output speed and context length. Nous’ fine-tuning builds on it to deliver precise, adaptive prompt execution, without refusing instructions on the various grounds that most commercial models do.

But censorship resistance is just the start of what Hermes has to offer.

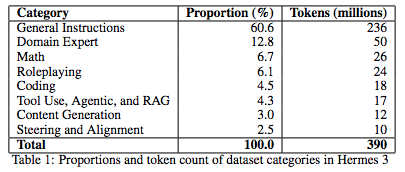

Hermes 3 was trained using a modified version of Axolotl, which we have previously covered in depth on this site, along with reinforcement learning from human feedback. It covers a wide range of domains and use cases.

These include conversational context retention, nuanced prompt understanding, and proficiency in generating complex, functional code snippets across multiple programming languages, among other things.

Advanced techniques such as YaRN (a method for extending the context window of LLMs) and NTK-aware scaling (which enables models to extend their context size without fine-tuning) are also applied.
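For readers curious how NTK-aware scaling works, here is a minimal Python sketch. It assumes standard rotary position embeddings (RoPE), where dimension pair i in an attention head of size d rotates at frequency base^(-2i/d). The NTK-aware trick raises the base to base · s^(d/(d-2)) for a context-extension factor s, which leaves the fastest-rotating dimensions untouched while slowing the slowest one by a factor of s. The head size, base, scale factor, and function names below are illustrative assumptions; this is not Nous’ code.

```python
def rope_inv_freq(dim: int, base: float = 10000.0) -> list[float]:
    """Inverse frequencies for rotary position embeddings (RoPE): base^(-2i/dim)."""
    return [base ** (-2 * i / dim) for i in range(dim // 2)]


def ntk_aware_base(base: float, scale: float, dim: int) -> float:
    """NTK-aware scaling: enlarge the RoPE base so the lowest frequency
    is slowed by the context-extension factor `scale`."""
    return base * scale ** (dim / (dim - 2))


dim = 128        # assumed per-head dimension (illustrative)
base = 10000.0   # common default RoPE base
scale = 4.0      # e.g. stretch a 4k-token context toward ~16k (illustrative)

orig = rope_inv_freq(dim, base)
scaled = rope_inv_freq(dim, ntk_aware_base(base, scale, dim))

# The highest-frequency dimension is unchanged (ratio 1.0) ...
print(scaled[0] / orig[0])
# ... while the lowest-frequency dimension rotates `scale` times slower
# (approximately 4.0, up to floating-point rounding).
print(orig[-1] / scaled[-1])
```

Because only the base changes, this kind of scaling can be applied to an existing model without retraining. YaRN refines the idea by treating different frequency bands differently and adjusting attention temperature, which this sketch does not show.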

The end result is that Hermes is one of the top-ranked open LLMs on Hugging Face’s leaderboard. It has also outperformed many other open-weight models in benchmark tests.

Several things have made such outperformance possible.

Compute and Community

Like any open model worth its salt, Hermes depends on scalable compute infrastructure.

For this, Nous has partnered with Lambda and its GPU cloud clusters to optimize compute performance.

However, what has truly put Nous over the top has been its ability to cultivate community.

Its human-centric focus extends to crowdsourcing data curation and evaluation at every turn, from contribution-based incentives to participation models.

This combination of assured privacy, transparency, and scalability has led to Hermes 3 being downloaded more than 35 million times, proof that AI really is for everyone.

However, Nous hasn’t just fine-tuned open-source models; it has set its sights much higher, aiming to make the next phase of AI, artificial superintelligence (ASI), widely available to all.

A few months ago, Nous launched Psyche on Solana.

Psyche, a decentralized AI training infrastructure, takes its name from the classical myth of Cupid and Psyche, in which the lovers overcome love’s obstacles. Its first phase is all about enabling models to be trained by any participant or by a subset of trusted actors.

As development continues, Psyche has real potential to accelerate progress in AI reasoning.

Nous Research is a pioneer, pushing decentralized AI forward with open models, a chat interface, and a newly launched API that makes its models even more accessible.

As artificial intelligence continues to proliferate and more people make it part of their everyday life, so too will the demand for more personalized experiences.

Beyond enabling the unrestricted use of AI, the real power of decentralized AI lies in allowing anyone to create models that serve any use case or application.

The things that will be built with it will push all of humanity forward.

From this standpoint, the future depends on decentralized AI.


If you would like more information on our thesis surrounding Decentralized AI or other transformative technologies, please email info@cadenza.vc